Leibniz integral rule


In calculus, the Leibniz integral rule for differentiation under the integral sign states that for an integral of the form [math]\displaystyle{ \int_{a(x)}^{b(x)} f(x,t)\,dt, }[/math] where [math]\displaystyle{ -\infty \lt a(x), b(x) \lt \infty }[/math] and the integrand [math]\displaystyle{ f(x,t) }[/math] depends on [math]\displaystyle{ x, }[/math] the derivative of this integral is expressible as [math]\displaystyle{ \begin{align} & \frac{d}{dx} \left (\int_{a(x)}^{b(x)} f(x,t)\,dt \right ) \\ &= f\big(x,b(x)\big)\cdot \frac{d}{dx} b(x) - f\big(x,a(x)\big)\cdot \frac{d}{dx} a(x) + \int_{a(x)}^{b(x)}\frac{\partial}{\partial x} f(x,t) \,dt \end{align} }[/math] where the partial derivative [math]\displaystyle{ \tfrac{\partial}{\partial x} }[/math] indicates that inside the integral, only the variation of [math]\displaystyle{ f(x, t) }[/math] with [math]\displaystyle{ x }[/math] is considered in taking the derivative.[1] It is named after Gottfried Leibniz.
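As a numerical sanity check of the formula, the following Python sketch compares a central finite difference of the integral with the right-hand side of the rule. It assumes hypothetical choices that do not come from the article, f(x, t) = sin(xt), a(x) = x and b(x) = x², and uses a small hand-written composite Simpson helper, `simpson`, rather than any library routine.

```python
import math


def simpson(g, lo, hi, n=400):
    """Composite Simpson's rule for the integral of g from lo to hi.

    n is rounded up to an even number; hi < lo gives the signed integral.
    """
    n += n % 2
    step = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * step)
    return total * step / 3


# Hypothetical test data: f(x, t) = sin(x t), a(x) = x, b(x) = x**2.
def f(x, t):
    return math.sin(x * t)


def f_x(x, t):
    """Partial derivative of f with respect to x, holding t fixed."""
    return t * math.cos(x * t)


def a(x):
    return x


def b(x):
    return x * x


def a_prime(x):
    return 1.0


def b_prime(x):
    return 2.0 * x


def integral(x):
    """I(x): integral of f(x, t) dt from a(x) to b(x)."""
    return simpson(lambda t: f(x, t), a(x), b(x))


def leibniz_rhs(x):
    """Boundary terms plus the integral of the partial derivative."""
    boundary = f(x, b(x)) * b_prime(x) - f(x, a(x)) * a_prime(x)
    interior = simpson(lambda t: f_x(x, t), a(x), b(x))
    return boundary + interior


def central_difference(func, x, h=1e-5):
    return (func(x + h) - func(x - h)) / (2 * h)


for x0 in (0.7, 1.3):
    print(x0, central_difference(integral, x0), leibniz_rhs(x0))
```

At x = 0.7 the upper limit b(x) = 0.49 lies below a(x) = 0.7; the rule still applies, and the signed Simpson helper handles the reversed limits.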

In the special case where the functions [math]\displaystyle{ a(x) }[/math] and [math]\displaystyle{ b(x) }[/math] are constants [math]\displaystyle{ a(x)=a }[/math] and [math]\displaystyle{ b(x)=b }[/math] with values that do not depend on [math]\displaystyle{ x, }[/math] this simplifies to: [math]\displaystyle{ \frac{d}{dx} \left(\int_a^b f(x,t)\,dt \right)= \int_a^b \frac{\partial}{\partial x} f(x,t) \,dt. }[/math]

If [math]\displaystyle{ a(x)=a }[/math] is constant and [math]\displaystyle{ b(x)=x }[/math], which is another common situation (for example, in the proof of Cauchy's repeated integration formula), the Leibniz integral rule becomes: [math]\displaystyle{ \frac{d}{dx} \left (\int_a^x f(x,t) \, dt \right )= f\big(x,x\big) + \int_a^x \frac{\partial}{\partial x} f(x,t) \, dt. }[/math]
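The special case can be illustrated with the kernel (x − t)g(t) that appears in Cauchy's repeated integration formula. In this sketch, g = cos and a = 0 are hypothetical choices, and `simpson` is a hand-written helper rather than a library function. Since f(x, x) = 0, the rule predicts that the derivative of the integral of (x − t) cos t from 0 to x equals the integral of cos t from 0 to x, that is, sin x.

```python
import math


def simpson(g, lo, hi, n=400):
    """Composite Simpson's rule for the integral of g from lo to hi (n rounded up to even)."""
    n += n % 2
    step = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * step)
    return total * step / 3


def repeated_integral(x):
    """Integral of (x - t) cos t dt from 0 to x (equals 1 - cos x)."""
    return simpson(lambda t: (x - t) * math.cos(t), 0.0, x)


def leibniz_rhs(x):
    boundary = (x - x) * math.cos(x)      # f(x, x) * d/dx(x), identically zero here
    interior = simpson(math.cos, 0.0, x)  # integral of the partial derivative, cos t
    return boundary + interior


x0, h = 2.0, 1e-5
numeric = (repeated_integral(x0 + h) - repeated_integral(x0 - h)) / (2 * h)
print(numeric, leibniz_rhs(x0), math.sin(x0))
```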

This important result may, under certain conditions, be used to interchange the integral and partial differential operators, and is particularly useful in the differentiation of integral transforms. An example of such is the moment generating function in probability theory, a variation of the Laplace transform, which can be differentiated to generate the moments of a random variable. Whether Leibniz's integral rule applies is essentially a question about the interchange of limits.
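For a moment generating function M(s) = E[e^{sX}], differentiating under the integral sign k times turns e^{st} into t^k e^{st}, so M^(k)(0) = E[X^k]. The sketch below assumes, purely for illustration, a random variable X uniform on [0, 1], so that M(s) is the integral of e^{st} over [0, 1]; it recovers E[X] = 1/2 and E[X²] = 1/3 and compares the first moment with a finite difference of M. The helper `simpson` is hand-written, not a library call.

```python
import math


def simpson(g, lo, hi, n=400):
    """Composite Simpson's rule for the integral of g from lo to hi (n rounded up to even)."""
    n += n % 2
    step = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * step)
    return total * step / 3


# Hypothetical example: X uniform on [0, 1], so its density is 1 on that interval.
def mgf(s):
    """M(s) = E[exp(s X)] as an integral over [0, 1]."""
    return simpson(lambda t: math.exp(s * t), 0.0, 1.0)


def mgf_derivative(s, k):
    """k-th derivative of M, obtained by differentiating under the integral sign:
    d^k/ds^k exp(s t) = t**k * exp(s t)."""
    return simpson(lambda t: t**k * math.exp(s * t), 0.0, 1.0)


mean = mgf_derivative(0.0, 1)           # E[X]
second_moment = mgf_derivative(0.0, 2)  # E[X**2]
h = 1e-4
finite_difference_mean = (mgf(h) - mgf(-h)) / (2 * h)
print(mean, second_moment, finite_difference_mean)
```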

General form: differentiation under the integral sign

Theorem — Let [math]\displaystyle{ f(x,t) }[/math] be a function such that both [math]\displaystyle{ f(x,t) }[/math] and its partial derivative [math]\displaystyle{ f_x(x, t) }[/math] are continuous in [math]\displaystyle{ t }[/math] and [math]\displaystyle{ x }[/math] in some region of the [math]\displaystyle{ xt }[/math]-plane, including [math]\displaystyle{ a(x) \leq t \leq b(x), }[/math] [math]\displaystyle{ x_0 \leq x \leq x_1. }[/math] Also suppose that the functions [math]\displaystyle{ a(x) }[/math] and [math]\displaystyle{ b(x) }[/math] are both continuous and both have continuous derivatives for [math]\displaystyle{ x_0 \leq x \leq x_1. }[/math] Then, for [math]\displaystyle{ x_0 \leq x \leq x_1, }[/math] [math]\displaystyle{ \frac{d}{dx} \left(\int_{a(x)}^{b(x)} f(x, t) \,dt\right) = f\big(x, b(x)\big) \cdot \frac{d}{dx} b(x) - f\big(x, a(x)\big) \cdot \frac{d}{dx} a(x) + \int_{a(x)}^{b(x)} \frac{\partial}{\partial x} f(x, t) \,dt. }[/math]

The right hand side may also be written using Lagrange's notation as: [math]\displaystyle{ f(x, b(x)) \, b^\prime(x) - f(x, a(x)) \, a^\prime(x) + \displaystyle\int_{a(x)}^{b(x)} f_x(x, t) \,dt. }[/math]

Stronger versions of the theorem only require that the partial derivative exist almost everywhere, and not that it be continuous.[2] This formula is the general form of the Leibniz integral rule and can be derived using the fundamental theorem of calculus. The (first) fundamental theorem of calculus is just the particular case of the above formula where [math]\displaystyle{ a(x) = a \in \Reals }[/math] is constant, [math]\displaystyle{ b(x) = x, }[/math] and [math]\displaystyle{ f(x, t) = f(t) }[/math] does not depend on [math]\displaystyle{ x. }[/math]

If both upper and lower limits are taken as constants, then the formula takes the shape of an operator equation: [math]\displaystyle{ \mathcal{I}_t \partial_x = \partial_x \mathcal{I}_t }[/math] where [math]\displaystyle{ \partial_x }[/math] is the partial derivative with respect to [math]\displaystyle{ x }[/math] and [math]\displaystyle{ \mathcal{I}_t }[/math] is the integral operator with respect to [math]\displaystyle{ t }[/math] over a fixed interval. That is, it is related to the symmetry of second derivatives, but involving integrals as well as derivatives. This case is also known as the Leibniz integral rule.

The following three basic theorems on the interchange of limits are essentially equivalent:

- the interchange of a derivative and an integral (differentiation under the integral sign; i.e., Leibniz integral rule);

- the change of order of partial derivatives;

- the change of order of integration (integration under the integral sign; i.e., Fubini's theorem).

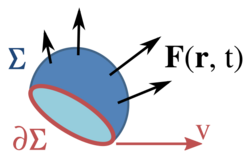

Three-dimensional, time-dependent case

A Leibniz integral rule for a two-dimensional surface moving in three-dimensional space is[3][4]

[math]\displaystyle{ \frac {d}{dt} \iint_{\Sigma (t)} \mathbf{F} (\mathbf{r}, t) \cdot d \mathbf{A} = \iint_{\Sigma (t)} \left(\mathbf{F}_t (\mathbf{r}, t) + \left[\nabla \cdot \mathbf{F} (\mathbf{r}, t) \right] \mathbf{v} \right) \cdot d \mathbf{A} - \oint_{\partial \Sigma (t)} \left[ \mathbf{v} \times \mathbf{F} ( \mathbf{r}, t) \right] \cdot d \mathbf{s}, }[/math]

where:

- F(r, t) is a vector field at the spatial position r at time t,

- Σ is a surface bounded by the closed curve ∂Σ,

- dA is a vector element of the surface Σ,

- ds is a vector element of the curve ∂Σ,

- v is the velocity of movement of the region Σ,

- ∇⋅ is the vector divergence,

- × is the vector cross product,

- The double integrals are surface integrals over the surface Σ, and the line integral is over the bounding curve ∂Σ.

Higher dimensions

The Leibniz integral rule can be extended to multidimensional integrals. In two and three dimensions, this rule is better known from the field of fluid dynamics as the Reynolds transport theorem: [math]\displaystyle{ \frac{d}{dt} \int_{D(t)} F(\mathbf x, t) \,dV = \int_{D(t)} \frac{\partial}{\partial t} F(\mathbf x, t)\,dV + \int_{\partial D(t)} F(\mathbf x, t) \mathbf v_b \cdot d\mathbf{\Sigma}, }[/math]

where [math]\displaystyle{ F(\mathbf x, t) }[/math] is a scalar function, D(t) and ∂D(t) denote a time-varying connected region of [math]\displaystyle{ \mathbf{R}^3 }[/math] and its boundary, respectively, [math]\displaystyle{ \mathbf v_b }[/math] is the Eulerian velocity of the boundary (see Lagrangian and Eulerian coordinates), and dΣ = n dS is the vector surface element, with n the outward unit normal.
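A two-dimensional analogue of this theorem can be checked numerically; in two dimensions the boundary term becomes a line integral over a curve. The sketch assumes a hypothetical disk D(t) of radius R(t) = 1 + t centred at the origin, so the boundary moves outward with normal speed v_b · n = R'(t) = 1, and a hypothetical field F(x, y, t) = e^{−t}(1 + x²). Area integrals are done in polar coordinates with a hand-written Simpson helper.

```python
import math


def simpson(g, lo, hi, n=100):
    """Composite Simpson's rule for the integral of g from lo to hi (n rounded up to even)."""
    n += n % 2
    step = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * step)
    return total * step / 3


# Hypothetical set-up: expanding disk of radius R(t) = 1 + t and F = exp(-t) (1 + x^2).
def F(x, y, t):
    return math.exp(-t) * (1.0 + x * x)


def F_t(x, y, t):
    return -math.exp(-t) * (1.0 + x * x)


def R(t):
    return 1.0 + t


def R_prime(t):
    return 1.0


def disk_integral(g, t, n=100):
    """Integral of g(x, y, t) over the disk of radius R(t), in polar coordinates."""
    radius = R(t)

    def over_radius(theta):
        c, s = math.cos(theta), math.sin(theta)
        return simpson(lambda r: g(r * c, r * s, t) * r, 0.0, radius, n)

    return simpson(over_radius, 0.0, 2.0 * math.pi, n)


def boundary_term(t, n=100):
    """Integral over the circle r = R(t) of F (v_b . n) ds, with ds = R dtheta."""
    radius = R(t)
    return simpson(
        lambda th: F(radius * math.cos(th), radius * math.sin(th), t) * R_prime(t) * radius,
        0.0, 2.0 * math.pi, n)


t0, h = 0.5, 1e-4
lhs = (disk_integral(F, t0 + h) - disk_integral(F, t0 - h)) / (2 * h)
rhs = disk_integral(F_t, t0) + boundary_term(t0)
print(lhs, rhs)
```

For this choice both sides equal e^{−t}π(2R + R³ − R² − R⁴/4) with R = 1 + t, which follows from integrating 1 + x² over the disk in closed form.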

The general statement of the Leibniz integral rule requires concepts from differential geometry, specifically differential forms, exterior derivatives, wedge products and interior products. With those tools, the Leibniz integral rule in n dimensions is[4] [math]\displaystyle{ \frac{d}{dt}\int_{\Omega(t)}\omega=\int_{\Omega(t)} i_{\mathbf v}(d_x\omega)+\int_{\partial \Omega(t)} i_{\mathbf v} \omega + \int_{\Omega(t)} \dot{\omega}, }[/math] where Ω(t) is a time-varying domain of integration, ω is a p-form, [math]\displaystyle{ \mathbf v=\frac{\partial \mathbf x}{\partial t} }[/math] is the vector field of the velocity, [math]\displaystyle{ i_{\mathbf v} }[/math] denotes the interior product with [math]\displaystyle{ \mathbf v }[/math], [math]\displaystyle{ d_x\omega }[/math] is the exterior derivative of ω with respect to the space variables only and [math]\displaystyle{ \dot{\omega} }[/math] is the time derivative of ω.

However, all of these identities can be derived from a more general statement about Lie derivatives: [math]\displaystyle{ \left.\frac{d}{dt}\right|_{t=0}\int_{\operatorname{im}_{\psi_t}(\Omega)} \omega = \int_{\Omega} \mathcal{L}_\Psi \omega. }[/math]

Here, the ambient manifold on which the differential form [math]\displaystyle{ \omega }[/math] lives includes both space and time.

- [math]\displaystyle{ \Omega }[/math] is the region of integration (a submanifold) at a given instant (it does not depend on [math]\displaystyle{ t }[/math], since its parametrization as a submanifold defines its position in time),

- [math]\displaystyle{ \mathcal{L} }[/math] is the Lie derivative,

- [math]\displaystyle{ \Psi }[/math] is the spacetime vector field obtained by adding the unit vector field in the direction of time to the purely spatial vector field [math]\displaystyle{ \mathbf v }[/math] from the previous formulas (i.e., [math]\displaystyle{ \Psi }[/math] is the spacetime velocity of [math]\displaystyle{ \Omega }[/math]),

- [math]\displaystyle{ \psi_t }[/math] is a diffeomorphism from the one-parameter group generated by the flow of [math]\displaystyle{ \Psi }[/math], and

- [math]\displaystyle{ \text{im}_{\psi_t}(\Omega) }[/math] is the image of [math]\displaystyle{ \Omega }[/math] under this diffeomorphism.

A notable feature of this form is that it accounts for the case when [math]\displaystyle{ \Omega }[/math] changes its shape and size over time, since such deformations are fully determined by [math]\displaystyle{ \Psi }[/math].

Measure theory statement

Let [math]\displaystyle{ X }[/math] be an open subset of [math]\displaystyle{ \mathbf{R} }[/math], and [math]\displaystyle{ \Omega }[/math] be a measure space. Suppose [math]\displaystyle{ f\colon X \times \Omega \to \mathbf{R} }[/math] satisfies the following conditions:[5][6][2]

- [math]\displaystyle{ f(x,\omega) }[/math] is a Lebesgue-integrable function of [math]\displaystyle{ \omega }[/math] for each [math]\displaystyle{ x \in X }[/math].

- For almost all [math]\displaystyle{ \omega \in \Omega }[/math], the partial derivative [math]\displaystyle{ f_x }[/math] exists for all [math]\displaystyle{ x \in X }[/math].

- There is an integrable function [math]\displaystyle{ \theta \colon \Omega \to \mathbf{R} }[/math] such that [math]\displaystyle{ |f_x(x,\omega)| \leq \theta ( \omega) }[/math] for all [math]\displaystyle{ x \in X }[/math] and almost every [math]\displaystyle{ \omega \in \Omega }[/math].

Then, for all [math]\displaystyle{ x \in X }[/math], [math]\displaystyle{ \frac{d}{dx} \int_\Omega f(x, \omega) \, d\omega = \int_{\Omega} f_x (x, \omega) \, d\omega. }[/math]

The proof relies on the dominated convergence theorem and the mean value theorem (details below).
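To illustrate the statement on an unbounded measure space, the sketch below takes the hypothetical example Ω = [0, ∞) with Lebesgue measure, X = (1/2, ∞) and f(x, ω) = e^{−xω}. For x in X, |f_x(x, ω)| = ωe^{−xω} ≤ ωe^{−ω/2}, which is integrable and can serve as θ, so the theorem gives d/dx (1/x) = −∫ ωe^{−xω} dω = −1/x². The infinite range is truncated at an assumed cut-off `W_MAX = 60`, and the integrals use a hand-written Simpson helper.

```python
import math


def simpson(g, lo, hi, n=4000):
    """Composite Simpson's rule for the integral of g from lo to hi (n rounded up to even)."""
    n += n % 2
    step = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * step)
    return total * step / 3


# Hypothetical example: f(x, w) = exp(-x w) on w in [0, inf), truncated at W_MAX.
# For x >= 1 the neglected tail is far smaller than the quadrature error.
W_MAX = 60.0


def phi(x):
    """Integral of exp(-x w) over [0, W_MAX]; approximately 1 / x."""
    return simpson(lambda w: math.exp(-x * w), 0.0, W_MAX)


def phi_prime_under_sign(x):
    """Integral of the partial derivative -w exp(-x w); approximately -1 / x**2."""
    return simpson(lambda w: -w * math.exp(-x * w), 0.0, W_MAX)


x0 = 1.5
h = 1e-5
finite_difference = (phi(x0 + h) - phi(x0 - h)) / (2 * h)
print(phi(x0), phi_prime_under_sign(x0), finite_difference, -1 / x0**2)
```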

Proofs

Proof of basic form

We first prove the case of constant limits of integration a and b.

We use Fubini's theorem to change the order of integration. For every x and h such that h > 0 and both x and x + h lie in [math]\displaystyle{ [x_0, x_1] }[/math], we have (writing s for the integration variable that runs from x to x + h): [math]\displaystyle{ \int_x^{x+h} \int_a^b f_x(s,t) \,dt \,ds = \int_a^b \int_x^{x+h} f_x(s,t) \,ds \,dt = \int_a^b \left(f(x+h,t) - f(x,t)\right) \,dt = \int_a^b f(x+h,t) \,dt - \int_a^b f(x,t) \,dt }[/math] The case h < 0 is handled in the same way.

Note that the integrals at hand are well defined, since [math]\displaystyle{ f_x(x,t) }[/math] is continuous on the closed rectangle [math]\displaystyle{ [x_0, x_1] \times [a,b] }[/math] and thus also uniformly continuous there; hence its integral with respect to either t or x is continuous in the other variable and therefore integrable with respect to it (essentially because, for uniformly continuous functions, the limit may be passed through the integral sign, as elaborated below).

Therefore: [math]\displaystyle{ \frac{\int_a^b f(x+h,t) \,dt - \int_a^b f(x,t) \,dt }{h} = \frac{1}{h}\int_x^{x+h} \int_a^b f_x(s,t) \,dt \,ds = \frac{F(x+h)-F(x)}{h} }[/math]

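Here we have defined [math]\displaystyle{ F(u) \equiv \int_{x_0}^{u} \int_a^b f_x(s,t) \,dt \,ds }[/math] (the lower limit [math]\displaystyle{ x_0 }[/math] may be replaced by any other point between [math]\displaystyle{ x_0 }[/math] and [math]\displaystyle{ x }[/math]).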

F is differentiable with derivative [math]\displaystyle{ \int_a^b f_x(x,t) \,dt }[/math], so we can take the limit where h approaches zero. For the left hand side this limit is: [math]\displaystyle{ \frac{d}{dx}\int_a^b f(x,t) \, dt }[/math]

For the right hand side, we get: [math]\displaystyle{ F'(x) = \int_a^b f_x(x,t) \, dt }[/math] And we thus prove the desired result: [math]\displaystyle{ \frac{d}{dx}\int_a^b f(x,t) \, dt = \int_a^b f_x(x,t) \, dt }[/math]

Another proof using the bounded convergence theorem

If the integrals at hand are Lebesgue integrals, we may use the bounded convergence theorem (valid for these integrals, but not for Riemann integrals) in order to show that the limit can be passed through the integral sign.

Note that this proof is weaker in the sense that it only shows that [math]\displaystyle{ f_x(x,t) }[/math] is Lebesgue integrable, but not that it is Riemann integrable. In the former (stronger) proof, if [math]\displaystyle{ f(x,t) }[/math] is Riemann integrable, then so is [math]\displaystyle{ f_x(x,t) }[/math] (and thus it is also Lebesgue integrable).

Let

[math]\displaystyle{ u(x) = \int_a^b f(x, t) \,dt. \qquad (1) }[/math]

By the definition of the derivative,

[math]\displaystyle{ u'(x) = \lim_{h \to 0} \frac{u(x + h) - u(x)}{h}. \qquad (2) }[/math]

Substitute equation (1) into equation (2). The difference of two integrals equals the integral of the difference, and 1/h is a constant, so [math]\displaystyle{ \begin{align} u'(x) &= \lim_{h \to 0} \frac{\int_a^bf(x + h, t)\,dt - \int_a^b f(x, t)\,dt}{h} \\ &= \lim_{h \to 0} \frac{\int_a^b\left( f(x + h, t) - f(x,t) \right)\,dt}{h} \\ &= \lim_{h \to 0} \int_a^b \frac{f(x + h, t) - f(x, t)}{h} \,dt. \end{align} }[/math]

We now show that the limit can be passed through the integral sign.

We claim that the passage of the limit under the integral sign is valid by the bounded convergence theorem (a corollary of the dominated convergence theorem). For each δ > 0, consider the difference quotient [math]\displaystyle{ f_\delta(x, t) = \frac{f(x + \delta, t) - f(x, t)}{\delta}. }[/math] For t fixed, the mean value theorem implies there exists z in the interval [x, x + δ] such that [math]\displaystyle{ f_\delta(x, t) = f_x(z, t). }[/math] Continuity of [math]\displaystyle{ f_x(x, t) }[/math] and compactness of the domain together imply that [math]\displaystyle{ f_x(x, t) }[/math] is bounded. The above application of the mean value theorem therefore gives a uniform (independent of [math]\displaystyle{ t }[/math]) bound on [math]\displaystyle{ f_\delta(x, t) }[/math]. The difference quotients converge pointwise to the partial derivative [math]\displaystyle{ f_x }[/math] by the assumption that the partial derivative exists.

The above argument shows that for every sequence [math]\displaystyle{ \{\delta_n\} \to 0 }[/math], the sequence [math]\displaystyle{ \{f_{\delta_n}(x, t)\} }[/math] is uniformly bounded and converges pointwise to [math]\displaystyle{ f_x }[/math]. The bounded convergence theorem states that if a sequence of functions on a set of finite measure is uniformly bounded and converges pointwise, then passage of the limit under the integral is valid. In particular, the limit and integral may be exchanged for every sequence [math]\displaystyle{ \{\delta_n\} \to 0 }[/math]. Therefore, the limit as δ → 0 may be passed through the integral sign.
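The two ingredients of this argument, a bound on the difference quotients that depends on neither t nor δ, and their pointwise convergence to f_x, can be observed numerically. The sketch assumes the hypothetical integrand f(x, t) = sin(xt) on t ∈ [0, 1], for which the mean value theorem gives |f_δ(x, t)| = |t cos(zt)| ≤ 1. Maxima are taken over a finite grid of t values, so they only approximate the suprema.

```python
import math


# Hypothetical example: f(x, t) = sin(x t) on t in [0, 1], so f_x(x, t) = t cos(x t).
def f(x, t):
    return math.sin(x * t)


def f_x(x, t):
    return t * math.cos(x * t)


def difference_quotient(x, t, delta):
    """f_delta(x, t) = (f(x + delta, t) - f(x, t)) / delta."""
    return (f(x + delta, t) - f(x, t)) / delta


x0 = 0.8
ts = [i / 200 for i in range(201)]  # grid on [0, 1]; maxima below approximate sups
results = []
for delta in (0.1, 0.01, 0.001):
    bound = max(abs(difference_quotient(x0, t, delta)) for t in ts)
    sup_error = max(abs(difference_quotient(x0, t, delta) - f_x(x0, t)) for t in ts)
    results.append((delta, bound, sup_error))
    print(delta, bound, sup_error)
```

The uniform bound stays below 1 for every δ, while the largest deviation from f_x shrinks roughly in proportion to δ.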

Variable limits form

For a continuous real-valued function g of one real variable, and real-valued differentiable functions [math]\displaystyle{ f_1 }[/math] and [math]\displaystyle{ f_2 }[/math] of one real variable, [math]\displaystyle{ \frac{d}{dx} \left( \int_{f_1(x)}^{f_2(x)} g(t) \,dt \right )= g\left(f_2(x)\right) {f_2'(x)} - g\left(f_1(x)\right) {f_1'(x)}. }[/math]

This follows from the chain rule and the First Fundamental Theorem of Calculus. Define [math]\displaystyle{ G(x) = \int_{f_1(x)}^{f_2(x)} g(t) \, dt, }[/math] and [math]\displaystyle{ \Gamma(x) = \int_{0}^{x} g(t) \, dt. }[/math] (Any fixed number in the domain of [math]\displaystyle{ g }[/math] may serve as the lower limit in place of 0.)

Then, [math]\displaystyle{ G(x) }[/math] can be written as a composition: [math]\displaystyle{ G(x) = (\Gamma \circ f_2)(x) - (\Gamma \circ f_1)(x) }[/math]. The Chain Rule then implies that [math]\displaystyle{ G'(x) = \Gamma'\left(f_2(x)\right) f_2'(x) - \Gamma'\left(f_1(x)\right) f_1'(x). }[/math] By the First Fundamental Theorem of Calculus, [math]\displaystyle{ \Gamma'(x) = g(x) }[/math]. Therefore, substituting this result above, we get the desired equation: [math]\displaystyle{ G'(x) = g\left(f_2(x)\right) {f_2'(x)} - g\left(f_1(x)\right) {f_1'(x)}. }[/math]

Note: This form can be particularly useful if the expression to be differentiated is of the form: [math]\displaystyle{ \int_{f_1(x)}^{f_2(x)} h(x)g(t) \,dt }[/math] Because [math]\displaystyle{ h(x) }[/math] does not depend on the variable of integration [math]\displaystyle{ t }[/math], it may be moved out from under the integral sign, and the above form may be used with the product rule, i.e., [math]\displaystyle{ \frac{d}{dx} \left( \int_{f_1(x)}^{f_2(x)} h(x)g(t) \,dt \right ) = \frac{d}{dx} \left(h(x) \int_{f_1(x)}^{f_2(x)} g(t) \,dt \right ) = h'(x)\int_{f_1(x)}^{f_2(x)} g(t) \,dt + h(x) \frac{d}{dx} \left(\int_{f_1(x)}^{f_2(x)} g(t) \,dt \right ) }[/math]

General form with variable limits

Set [math]\displaystyle{ \varphi(\alpha) = \int_a^b f(x,\alpha)\,dx, }[/math] where a and b are functions of α that exhibit increments Δa and Δb, respectively, when α is increased by Δα. Then, [math]\displaystyle{ \begin{align} \Delta\varphi &= \varphi(\alpha + \Delta\alpha) - \varphi(\alpha) \\[4pt] &= \int_{a + \Delta a}^{b + \Delta b}f(x, \alpha + \Delta\alpha)\,dx - \int_a^b f(x, \alpha)\,dx \\[4pt] &= \int_{a + \Delta a}^af(x, \alpha + \Delta\alpha)\,dx + \int_a^bf(x, \alpha + \Delta\alpha)\,dx + \int_b^{b + \Delta b} f(x, \alpha+\Delta\alpha)\,dx - \int_a^b f(x, \alpha)\,dx \\[4pt] &= -\int_a^{a + \Delta a} f(x, \alpha + \Delta\alpha)\,dx + \int_a^b [f(x, \alpha + \Delta\alpha) - f(x,\alpha)]\,dx + \int_b^{b + \Delta b} f(x, \alpha + \Delta\alpha)\,dx. \end{align} }[/math]

A form of the mean value theorem, [math]\displaystyle{ \int_a^b f(x)\,dx = (b - a)f(\xi) }[/math], where a < ξ < b, may be applied to the first and last integrals of the formula for Δφ above, resulting in [math]\displaystyle{ \Delta\varphi = -\Delta a f(\xi_1, \alpha + \Delta\alpha) + \int_a^b [f(x, \alpha + \Delta\alpha) - f(x,\alpha)]\,dx + \Delta b f(\xi_2, \alpha + \Delta\alpha). }[/math]

Divide by Δα and let Δα → 0. Notice that [math]\displaystyle{ \xi_1 \to a }[/math] and [math]\displaystyle{ \xi_2 \to b }[/math], so by the continuity of f, [math]\displaystyle{ f(\xi_1, \alpha + \Delta\alpha) \to f(a, \alpha) }[/math] and [math]\displaystyle{ f(\xi_2, \alpha + \Delta\alpha) \to f(b, \alpha) }[/math]. We may pass the limit through the integral sign: [math]\displaystyle{ \lim_{\Delta\alpha\to 0}\int_a^b \frac{f(x,\alpha + \Delta\alpha) - f(x,\alpha)}{\Delta\alpha}\,dx = \int_a^b \frac{\partial}{\partial\alpha}f(x, \alpha)\,dx, }[/math] again by the bounded convergence theorem. This yields the general form of the Leibniz integral rule, [math]\displaystyle{ \frac{d\varphi}{d\alpha} = \int_a^b \frac{\partial}{\partial\alpha}f(x, \alpha)\,dx + f(b, \alpha) \frac{db}{d\alpha} - f(a, \alpha)\frac{da}{d\alpha}. }[/math]

Alternative proof of the general form with variable limits, using the chain rule

The general form of Leibniz's Integral Rule with variable limits can be derived as a consequence of the basic form of Leibniz's Integral Rule, the multivariable chain rule, and the First Fundamental Theorem of Calculus. Suppose [math]\displaystyle{ f }[/math] is defined in a rectangle in the [math]\displaystyle{ x-t }[/math] plane, for [math]\displaystyle{ x \in [x_1, x_2] }[/math] and [math]\displaystyle{ t \in [t_1, t_2] }[/math]. Also, assume [math]\displaystyle{ f }[/math] and the partial derivative [math]\displaystyle{ \frac{\partial f}{\partial x} }[/math] are both continuous functions on this rectangle. Suppose [math]\displaystyle{ a, b }[/math] are differentiable real valued functions defined on [math]\displaystyle{ [x_1, x_2] }[/math], with values in [math]\displaystyle{ [t_1, t_2] }[/math] (i.e. for every [math]\displaystyle{ x \in [x_1, x_2], a(x) , b(x) \in [t_1, t_2] }[/math]). Now, set [math]\displaystyle{ F(x,y) = \int_{t_1}^{y} f(x,t)\,dt , \qquad \text{for} ~ x \in [x_1, x_2] ~\text{and}~ y \in [t_1, t_2] }[/math] and [math]\displaystyle{ G(x) = \int_{a(x)}^{b(x)} f(x,t)\,dt , \quad \text{for} ~ x \in [x_1, x_2] }[/math]

Then, by properties of Definite Integrals, we can write [math]\displaystyle{ \begin{align} G(x) &= \int_{t_1}^{b(x)} f(x,t)\,dt - \int_{t_1}^{a(x)} f(x,t)\,dt \\[4pt] &= F(x, b(x)) - F(x, a(x)) \end{align} }[/math]

Since the functions [math]\displaystyle{ F, a, b }[/math] are all differentiable (see the remark at the end of the proof), by the Multivariable Chain Rule, it follows that [math]\displaystyle{ G }[/math] is differentiable, and its derivative is given by the formula: [math]\displaystyle{ G'(x) = \left(\frac{\partial F}{\partial x} (x, b(x)) + \frac{\partial F}{\partial y} (x, b(x) ) b'(x) \right) - \left(\frac{\partial F}{\partial x} (x, a(x)) + \frac{\partial F}{\partial y} (x, a(x)) a'(x) \right) }[/math]

Now, note that for every [math]\displaystyle{ x \in [x_1, x_2] }[/math], and for every [math]\displaystyle{ y \in [t_1, t_2] }[/math], we have that [math]\displaystyle{ \frac{\partial F}{\partial x}(x, y) = \int_{t_1}^y \frac{\partial f}{\partial x}(x,t) \, dt }[/math], because when taking the partial derivative with respect to [math]\displaystyle{ x }[/math] of [math]\displaystyle{ F }[/math], we are keeping [math]\displaystyle{ y }[/math] fixed in the expression [math]\displaystyle{ \int_{t_1}^{y} f(x,t)\,dt }[/math]; thus the basic form of Leibniz's Integral Rule with constant limits of integration applies. Next, by the First Fundamental Theorem of Calculus, we have that [math]\displaystyle{ \frac{\partial F}{\partial y}(x, y) = f(x,y) }[/math]; because when taking the partial derivative with respect to [math]\displaystyle{ y }[/math] of [math]\displaystyle{ F }[/math], the first variable [math]\displaystyle{ x }[/math] is fixed, so the fundamental theorem can indeed be applied.

Substituting these results into the equation for [math]\displaystyle{ G'(x) }[/math] above gives: [math]\displaystyle{ \begin{align} G'(x) &= \left(\int_{t_1}^{b(x)} \frac{\partial f}{\partial x}(x,t) \, dt + f(x, b(x)) b'(x) \right) - \left(\int_{t_1}^{a(x)} \dfrac{\partial f}{\partial x}(x,t) \, dt + f(x, a(x)) a'(x) \right) \\[2pt] &= f(x,b(x)) b'(x) - f(x,a(x)) a'(x) + \int_{a(x)}^{b(x)} \frac{\partial f}{\partial x}(x,t) \, dt, \end{align} }[/math] as desired.

There is a technical point in the proof above which is worth noting: applying the Chain Rule to [math]\displaystyle{ G }[/math] requires that [math]\displaystyle{ F }[/math] already be differentiable. This is where we use our assumptions about [math]\displaystyle{ f }[/math]. As mentioned above, the partial derivatives of [math]\displaystyle{ F }[/math] are given by the formulas [math]\displaystyle{ \frac{\partial F}{\partial x}(x, y) = \int_{t_1}^y \frac{\partial f}{\partial x}(x,t) \, dt }[/math] and [math]\displaystyle{ \frac{\partial F}{\partial y}(x, y) = f(x,y) }[/math]. Since [math]\displaystyle{ \dfrac{\partial f}{\partial x} }[/math] is continuous, its integral is also a continuous function,[7] and since [math]\displaystyle{ f }[/math] is also continuous, these two results show that both the partial derivatives of [math]\displaystyle{ F }[/math] are continuous. Since continuity of partial derivatives implies differentiability of the function,[8] [math]\displaystyle{ F }[/math] is indeed differentiable.

Three-dimensional, time-dependent form

At time t the surface Σ in Figure 1 contains a set of points arranged about a centroid [math]\displaystyle{ \mathbf{C}(t) }[/math]. The function [math]\displaystyle{ \mathbf{F}(\mathbf{r}, t) }[/math] can be written as [math]\displaystyle{ \mathbf{F}(\mathbf{C}(t) + \mathbf{r} - \mathbf{C}(t), t) = \mathbf{F}(\mathbf{C}(t) + \mathbf{I}, t), }[/math] with [math]\displaystyle{ \mathbf{I} }[/math] independent of time. Variables are shifted to a new frame of reference attached to the moving surface, with origin at [math]\displaystyle{ \mathbf{C}(t) }[/math]. For a rigidly translating surface, the limits of integration are then independent of time, so: [math]\displaystyle{ \frac {d}{dt} \left (\iint_{\Sigma (t)} d \mathbf{A}_{\mathbf{r}}\cdot \mathbf{F}(\mathbf{r}, t) \right) = \iint_\Sigma d \mathbf{A}_{\mathbf{I}} \cdot \frac {d}{dt}\mathbf{F}(\mathbf{C}(t) + \mathbf{I}, t), }[/math] where the limits of integration confining the integral to the region Σ are no longer time-dependent, so differentiation passes through the integral to act on the integrand only: [math]\displaystyle{ \frac {d}{dt}\mathbf{F}( \mathbf{C}(t) + \mathbf{I}, t) = \mathbf{F}_t(\mathbf{C}(t) + \mathbf{I}, t) + \mathbf{v \cdot \nabla F}(\mathbf{C}(t) + \mathbf{I}, t) = \mathbf{F}_t(\mathbf{r}, t) + \mathbf{v} \cdot \nabla \mathbf{F}(\mathbf{r}, t), }[/math] with the velocity of motion of the surface defined by [math]\displaystyle{ \mathbf{v} = \frac {d}{dt} \mathbf{C} (t). }[/math]

This equation expresses the material derivative of the field, that is, the derivative with respect to a coordinate system attached to the moving surface. Having found the derivative, variables can be switched back to the original frame of reference. We notice that (see article on curl) [math]\displaystyle{ \nabla \times \left(\mathbf{v} \times \mathbf{F}\right) = (\nabla \cdot \mathbf{F} + \mathbf{F} \cdot \nabla) \mathbf{v}- (\nabla \cdot \mathbf{v} + \mathbf{v} \cdot \nabla) \mathbf{F}, }[/math] and that Stokes' theorem equates the surface integral of the curl over Σ with a line integral over ∂Σ. Using the identity to rewrite the term [math]\displaystyle{ (\mathbf{v} \cdot \nabla)\mathbf{F} }[/math] in the integrand, and Stokes' theorem to turn the resulting curl term into a line integral, gives: [math]\displaystyle{ \frac{d}{dt} \left(\iint_{\Sigma (t)} \mathbf{F} (\mathbf{r}, t) \cdot d \mathbf{A}\right) = \iint_{\Sigma (t)} \big(\mathbf{F}_t (\mathbf{r}, t) + \left(\mathbf{F \cdot \nabla} \right)\mathbf{v} + \left(\nabla \cdot \mathbf{F} \right) \mathbf{v} - (\nabla \cdot \mathbf{v})\mathbf{F}\big)\cdot d\mathbf{A} - \oint_{\partial \Sigma (t)}\left(\mathbf{v} \times \mathbf{F}\right)\cdot d\mathbf{s}. }[/math]

The sign of the line integral is based on the right-hand rule for the choice of direction of line element ds. To establish this sign, for example, suppose the field F points in the positive z-direction, and the surface Σ is a portion of the xy-plane with perimeter ∂Σ. We adopt the normal to Σ to be in the positive z-direction. Positive traversal of ∂Σ is then counterclockwise (right-hand rule with thumb along z-axis). Then the integral on the left-hand side determines a positive flux of F through Σ. Suppose Σ translates in the positive x-direction at velocity v. An element of the boundary of Σ parallel to the y-axis, say ds, sweeps out an area vt × ds in time t. If we integrate around the boundary ∂Σ in a counterclockwise sense, vt × ds points in the negative z-direction on the left side of ∂Σ (where ds points downward), and in the positive z-direction on the right side of ∂Σ (where ds points upward), which makes sense because Σ is moving to the right, adding area on the right and losing it on the left. On that basis, the flux of F is increasing on the right of ∂Σ and decreasing on the left. However, the dot product v × F ⋅ ds = −F × v ⋅ ds = −F ⋅ v × ds. Consequently, the sign of the line integral is taken as negative.

If v is constant, then [math]\displaystyle{ (\mathbf{F} \cdot \nabla)\mathbf{v} = 0 }[/math] and [math]\displaystyle{ \nabla \cdot \mathbf{v} = 0, }[/math] so [math]\displaystyle{ \frac {d}{dt} \iint_{\Sigma (t)} \mathbf{F} (\mathbf{r}, t) \cdot d \mathbf{A} = \iint_{\Sigma (t)} \big(\mathbf{F}_t (\mathbf{r}, t) + \left(\nabla \cdot \mathbf{F} \right) \mathbf{v}\big) \cdot d \mathbf{A} - \oint_{\partial \Sigma (t)}\left(\mathbf{v} \times \mathbf{F}\right) \cdot \,d\mathbf{s}, }[/math] which is the quoted result. This proof does not consider the possibility of the surface deforming as it moves.
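The constant-velocity result can be checked for a simple translating surface. The sketch assumes a hypothetical unit square [c, c + 1] × [0, 1] in the plane z = 0 with normal +z, translating with v = (0.7, 0, 0) so that c = 0.7t, and a hypothetical field F = (0, 0, g) with g(x, y, t) = sin(x + t)(1 + y²). Because g does not depend on z, ∇ · F = 0, so the right-hand side reduces to the flux of F_t minus the counterclockwise line integral of v × F, which the code evaluates edge by edge with a hand-written Simpson helper.

```python
import math


def simpson(g, lo, hi, n=60):
    """Composite Simpson's rule for the integral of g from lo to hi (n rounded up to even)."""
    n += n % 2
    step = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * step)
    return total * step / 3


V = (0.7, 0.0, 0.0)  # hypothetical constant velocity of the square


def g(x, y, t):
    return math.sin(x + t) * (1.0 + y * y)


def g_t(x, y, t):
    return math.cos(x + t) * (1.0 + y * y)


def field(x, y, t):
    return (0.0, 0.0, g(x, y, t))  # F = (0, 0, g); div F = dg/dz = 0


def cross(p, q):
    return (p[1] * q[2] - p[2] * q[1], p[2] * q[0] - p[0] * q[2], p[0] * q[1] - p[1] * q[0])


def dot(p, q):
    return sum(pi * qi for pi, qi in zip(p, q))


def surface_integral(h_func, t, n=60):
    """Integral of h_func(x, y, t) dx dy over the square at time t (dA = z-hat dx dy)."""
    c = V[0] * t
    return simpson(lambda y: simpson(lambda x: h_func(x, y, t), c, c + 1.0, n), 0.0, 1.0, n)


def line_integral(t, n=60):
    """Counterclockwise integral of (v x F) . ds around the boundary of the square."""
    c = V[0] * t
    corners = [(c, 0.0), (c + 1.0, 0.0), (c + 1.0, 1.0), (c, 1.0)]
    total = 0.0
    for (x0, y0), (x1, y1) in zip(corners, corners[1:] + corners[:1]):
        d = (x1 - x0, y1 - y0, 0.0)  # edge r(s) = start + s d for s in [0, 1]
        total += simpson(
            lambda s: dot(cross(V, field(x0 + s * d[0], y0 + s * d[1], t)), d), 0.0, 1.0, n)
    return total


t0, h = 0.3, 1e-5
lhs = (surface_integral(g, t0 + h) - surface_integral(g, t0 - h)) / (2 * h)
rhs = surface_integral(g_t, t0) - line_integral(t0)
print(lhs, rhs)
```

For this choice both sides equal (4/3)(1 + 0.7)(sin(c + 1 + t) − sin(c + t)) with c = 0.7t, which follows from integrating g over the square in closed form.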

Alternative derivation

Lemma. One has: [math]\displaystyle{ \frac{\partial}{\partial b} \left (\int_a^b f(x) \,dx \right ) = f(b), \qquad \frac{\partial}{\partial a} \left (\int_a^b f(x) \,dx \right )= -f(a). }[/math]

Proof. From the proof of the fundamental theorem of calculus,

[math]\displaystyle{ \begin{align} \frac{\partial}{\partial b} \left (\int_a^b f(x) \,dx \right ) &= \lim_{\Delta b \to 0} \frac{1}{\Delta b} \left[ \int_a^{b+\Delta b} f(x)\,dx - \int_a^b f(x)\,dx \right] \\[6pt] &= \lim_{\Delta b \to 0} \frac{1}{\Delta b} \int_b^{b+\Delta b} f(x)\,dx \\[6pt] &= \lim_{\Delta b \to 0} \frac{1}{\Delta b} \left[ f(b) \Delta b + o(\Delta b) \right] \\[6pt] &= f(b), \end{align} }[/math] and [math]\displaystyle{ \begin{align} \frac{\partial}{\partial a} \left (\int_a^b f(x) \,dx \right )&= \lim_{\Delta a \to 0} \frac{1}{\Delta a} \left[ \int_{a+\Delta a}^b f(x)\,dx - \int_a^b f(x)\,dx \right] \\[6pt] &= \lim_{\Delta a \to 0} \frac{1}{\Delta a} \int_{a+\Delta a}^a f(x)\,dx \\[6pt] &= \lim_{\Delta a \to 0} \frac{1}{\Delta a} \left[ -f(a) \Delta a + o(\Delta a) \right]\\[6pt] &= -f(a), \end{align} }[/math] where the [math]\displaystyle{ o }[/math] terms follow from the continuity of [math]\displaystyle{ f }[/math] at [math]\displaystyle{ b }[/math] and at [math]\displaystyle{ a }[/math].

Suppose a and b are constant, and that f(x) involves a parameter α which is constant in the integration but may vary to form different integrals. Assume that f(x, α) is a continuous function of x and α in the compact set [math]\displaystyle{ \{(x, \alpha) : \alpha_0 \le \alpha \le \alpha_1 \text{ and } a \le x \le b\} }[/math], and that the partial derivative [math]\displaystyle{ f_\alpha(x, \alpha) }[/math] exists and is continuous. If one defines: [math]\displaystyle{ \varphi(\alpha) = \int_a^b f(x,\alpha)\,dx, }[/math] then [math]\displaystyle{ \varphi }[/math] may be differentiated with respect to α by differentiating under the integral sign, i.e., [math]\displaystyle{ \frac{d\varphi}{d\alpha}=\int_a^b\frac{\partial}{\partial\alpha}f(x,\alpha)\,dx. }[/math]

By the Heine–Cantor theorem, f is uniformly continuous on that set. In other words, for any ε > 0 there exists δ > 0 such that, for all Δα with |Δα| < δ and all x in [a, b], [math]\displaystyle{ |f(x,\alpha+\Delta \alpha)-f(x,\alpha)|\lt \varepsilon. }[/math]

On the other hand, [math]\displaystyle{ \begin{align} \Delta\varphi &=\varphi(\alpha+\Delta \alpha)-\varphi(\alpha) \\[6pt] &=\int_a^b f(x,\alpha+\Delta\alpha)\,dx - \int_a^b f(x,\alpha)\, dx \\[6pt] &=\int_a^b \left (f(x,\alpha+\Delta\alpha)-f(x,\alpha) \right )\,dx, \end{align} }[/math] so for such Δα, [math]\displaystyle{ |\Delta\varphi| \le \int_a^b \left|f(x,\alpha+\Delta\alpha)-f(x,\alpha)\right|\,dx \le \varepsilon (b-a). }[/math]

Hence φ(α) is a continuous function.

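Similarly, if [math]\displaystyle{ \frac{\partial}{\partial\alpha} f(x,\alpha) }[/math] exists and is continuous, then, by the mean value theorem and the uniform continuity of [math]\displaystyle{ \frac{\partial f}{\partial\alpha} }[/math] on the compact set, for all ε > 0 there exists δ > 0 such that, for all Δα with 0 < |Δα| < δ, [math]\displaystyle{ \forall x \in [a, b], \quad \left|\frac{f(x,\alpha+\Delta \alpha)-f(x,\alpha)}{\Delta \alpha} - \frac{\partial f}{\partial\alpha}(x,\alpha)\right|\lt \varepsilon. }[/math]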

Therefore, [math]\displaystyle{ \frac{\Delta \varphi}{\Delta \alpha}=\int_a^b\frac{f(x,\alpha+\Delta\alpha)-f(x,\alpha)}{\Delta \alpha}\,dx = \int_a^b \frac{\partial f(x,\alpha)}{\partial \alpha}\,dx + R, }[/math] where [math]\displaystyle{ |R| \lt \int_a^b \varepsilon\, dx = \varepsilon(b-a). }[/math]

Since ε > 0 was arbitrary, |R| can be made as small as desired by taking Δα small enough, so [math]\displaystyle{ \lim_{{\Delta \alpha} \to 0}\frac{\Delta\varphi}{\Delta \alpha}= \frac{d\varphi}{d\alpha} = \int_a^b \frac{\partial}{\partial \alpha} f(x,\alpha)\,dx. }[/math]

This is the formula we set out to prove.

Now, suppose [math]\displaystyle{ \int_a^b f(x,\alpha)\,dx=\varphi(\alpha), }[/math] where a and b are functions of α which take increments Δa and Δb, respectively, when α is increased by Δα. Then, [math]\displaystyle{ \begin{align} \Delta\varphi &=\varphi(\alpha+\Delta\alpha)-\varphi(\alpha) \\[6pt] &=\int_{a+\Delta a}^{b+\Delta b}f(x,\alpha+\Delta\alpha)\,dx -\int_a^b f(x,\alpha)\,dx \\[6pt] &=\int_{a+\Delta a}^af(x,\alpha+\Delta\alpha)\,dx+\int_a^bf(x,\alpha+\Delta\alpha)\,dx+\int_b^{b+\Delta b}f(x,\alpha+\Delta\alpha)\,dx -\int_a^b f(x,\alpha)\,dx \\[6pt] &=-\int_a^{a+\Delta a} f(x,\alpha+\Delta\alpha)\,dx+\int_a^b[f(x,\alpha+\Delta\alpha)-f(x,\alpha)]\,dx+\int_b^{b+\Delta b} f(x,\alpha+\Delta\alpha)\,dx. \end{align} }[/math]

A form of the mean value theorem, [math]\displaystyle{ \int_a^b f(x)\,dx=(b-a)f(\xi), }[/math] where a < ξ < b, can be applied to the first and last integrals of the formula for Δφ above, resulting in [math]\displaystyle{ \Delta\varphi=-\Delta a\,f(\xi_1,\alpha+\Delta\alpha)+\int_a^b[f(x,\alpha+\Delta\alpha)-f(x,\alpha)]\,dx+\Delta b\,f(\xi_2,\alpha+\Delta\alpha). }[/math]

Dividing by Δα, letting Δα → 0, noticing that [math]\displaystyle{ \xi_1 \to a }[/math] and [math]\displaystyle{ \xi_2 \to b }[/math], and using the above derivation for [math]\displaystyle{ \frac{d\varphi}{d\alpha} = \int_a^b\frac{\partial}{\partial \alpha} f(x,\alpha)\,dx }[/math] yields [math]\displaystyle{ \frac{d\varphi}{d\alpha} = \int_a^b\frac{\partial}{\partial \alpha} f(x,\alpha)\,dx+f(b,\alpha)\frac{db}{d\alpha}-f(a,\alpha)\frac{da}{d\alpha}. }[/math]

This is the general form of the Leibniz integral rule.
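A numerical sketch of the general form follows, under illustrative assumptions: the integrand f(x, α) = cos(αx) and the limits a(α) = α, b(α) = α² are arbitrary choices, and `leibniz_derivative` and `simpson` are hypothetical helper names. The code evaluates the right-hand side of the rule (interior integral plus the two boundary terms) and compares it with a central difference of φ.

```python
import math


def simpson(g, lo, hi, n=2000):
    """Composite Simpson's rule; lo > hi gives the oriented (negated) integral."""
    if n % 2:
        n += 1
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3


def leibniz_derivative(f, f_alpha, a, b, da, db, alpha):
    """Right-hand side of the general rule for d/dalpha of the integral of f from a(alpha) to b(alpha)."""
    interior = simpson(lambda x: f_alpha(x, alpha), a(alpha), b(alpha))
    return interior + f(b(alpha), alpha) * db(alpha) - f(a(alpha), alpha) * da(alpha)


# Hypothetical example data: f(x, alpha) = cos(alpha x), a(alpha) = alpha, b(alpha) = alpha^2
def f(x, alpha):
    return math.cos(alpha * x)


def f_alpha(x, alpha):
    return -x * math.sin(alpha * x)  # partial derivative of f with respect to alpha


def a(alpha):
    return alpha


def da(alpha):
    return 1.0


def b(alpha):
    return alpha * alpha


def db(alpha):
    return 2.0 * alpha


def phi(alpha):
    return simpson(lambda x: f(x, alpha), a(alpha), b(alpha))


alpha, h = 1.3, 1e-5
rule = leibniz_derivative(f, f_alpha, a, b, da, db, alpha)
numeric = (phi(alpha + h) - phi(alpha - h)) / (2 * h)
print(rule, numeric)
```

Dropping either boundary term makes the two printed values disagree, which is a quick way to see that both contributions are needed when the limits depend on α.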

Examples

Example 1: Fixed limits

Consider the function [math]\displaystyle{ \varphi(\alpha)=\int_0^1\frac{\alpha}{x^2+\alpha^2}\,dx. }[/math]

The function under the integral sign is not continuous at the point (x, α) = (0, 0), and the function φ(α) has a discontinuity at α = 0 because φ(α) approaches ±π/2 as α → 0±.

If we differentiate φ(α) with respect to α under the integral sign, we get [math]\displaystyle{ \frac{d}{d\alpha} \varphi(\alpha)=\int_0^1\frac{\partial}{\partial\alpha}\left(\frac{\alpha}{x^2+\alpha^2}\right)\,dx=\int_0^1\frac{x^2-\alpha^2}{(x^2+\alpha^2)^2} dx=\left.-\frac{x}{x^2+\alpha^2}\right|_0^1=-\frac{1}{1+\alpha^2}, }[/math] which is valid for all values of α except α = 0. Integrating this with respect to α separately on each side of α = 0, and fixing the two constants of integration by the limits φ(α) → ±π/2 as α → 0±, gives [math]\displaystyle{ \varphi(\alpha) = \begin{cases} -\arctan(\alpha)-\frac{\pi}{2}, & \alpha \lt 0, \\ 0, & \alpha = 0, \\ -\arctan(\alpha)+\frac{\pi}{2}, & \alpha \gt 0. \end{cases} }[/math]
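A minimal numerical check of this example, using a hypothetical Simpson-rule helper `simpson`: for several nonzero α it compares the integral of the partial derivative with −1/(1 + α²), and compares φ(α) with the antiderivative −arctan α ± π/2, where the constant takes the sign of α. It also shows the jump of size π at α = 0. The value φ(0) is not evaluated numerically, since the integrand is 0/0 at x = 0 there.

```python
import math


def simpson(g, lo, hi, n=2000):
    """Composite Simpson's rule for g on [lo, hi] with n subintervals (n forced even)."""
    if n % 2:
        n += 1
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3


def phi(alpha):
    # phi(alpha) = integral over [0, 1] of alpha / (x^2 + alpha^2); requires alpha != 0
    return simpson(lambda x: alpha / (x * x + alpha * alpha), 0.0, 1.0)


def dphi_under_sign(alpha):
    # Integral of the partial derivative (x^2 - alpha^2) / (x^2 + alpha^2)^2, alpha != 0
    return simpson(lambda x: (x * x - alpha * alpha) / (x * x + alpha * alpha) ** 2, 0.0, 1.0)


def phi_closed(alpha):
    # Antiderivative of -1/(1 + alpha^2) with a separate constant on each side of alpha = 0
    if alpha == 0:
        return 0.0
    return math.copysign(math.pi / 2, alpha) - math.atan(alpha)


for alpha in (-2.0, -0.5, 0.5, 2.0):
    print(alpha, phi(alpha), phi_closed(alpha), dphi_under_sign(alpha), -1 / (1 + alpha * alpha))

# Size of the jump across alpha = 0 (close to pi)
print(phi_closed(1e-12) - phi_closed(-1e-12))
```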

Example 2: Variable limits

An example with variable limits: [math]\displaystyle{ \begin{align} \frac{d}{dx} \int_{\sin x}^{\cos x} \cosh t^2\,dt &= \cosh\left(\cos^2 x\right) \frac{d}{dx}(\cos x) - \cosh\left(\sin^2 x\right) \frac{d}{dx} (\sin x) + \int_{\sin x}^{\cos x} \frac{\partial}{\partial x} (\cosh t^2) \, dt \\[6pt] &= \cosh(\cos^2 x) (-\sin x) - \cosh(\sin^2 x) (\cos x) + 0 \\[6pt] &= - \cosh(\cos^2 x) \sin x - \cosh(\sin^2 x) \cos x. \end{align} }[/math]
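The result can be spot-checked numerically. In this sketch `simpson` is a hypothetical quadrature helper; F(x) is computed by quadrature and differentiated by a central difference, then compared with the closed form obtained from the rule.

```python
import math


def simpson(g, lo, hi, n=2000):
    """Composite Simpson's rule; lo > hi gives the oriented (negated) integral."""
    if n % 2:
        n += 1
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3


def F(x):
    # F(x) = integral of cosh(t^2) dt from sin x to cos x
    return simpson(lambda t: math.cosh(t * t), math.sin(x), math.cos(x))


def dF_leibniz(x):
    # Leibniz rule result; the integrand does not depend on x, so the interior term is 0
    return -math.cosh(math.cos(x) ** 2) * math.sin(x) - math.cosh(math.sin(x) ** 2) * math.cos(x)


x, h = 0.4, 1e-5
numeric = (F(x + h) - F(x - h)) / (2 * h)
print(dF_leibniz(x), numeric)
```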

Applications

Evaluating definite integrals

The formula [math]\displaystyle{ \frac{d}{dx} \left (\int_{a(x)}^{b(x)}f(x,t) \, dt \right) = f\big(x,b(x)\big)\cdot \frac{d}{dx} b(x) - f\big(x,a(x)\big)\cdot \frac{d}{dx} a(x) + \int_{a(x)}^{b(x)}\frac{\partial}{\partial x} f(x,t) \, dt }[/math] can be of use when evaluating certain definite integrals. When used in this context, the Leibniz integral rule for differentiating under the integral sign is also known as Feynman's trick for integration.

Example 3

Consider [math]\displaystyle{ \varphi(\alpha)=\int_0^\pi \ln \left (1-2\alpha\cos(x)+\alpha^2 \right )\,dx, \qquad |\alpha| \neq 1. }[/math]

Now, [math]\displaystyle{ \begin{align} \frac{d}{d\alpha} \varphi(\alpha) &=\int_0^\pi \frac{-2\cos(x)+2\alpha }{1-2\alpha \cos(x)+\alpha^2} dx \\[6pt] &=\frac{1}{\alpha}\int_0^\pi \left(1-\frac{1-\alpha^2}{1-2\alpha \cos(x)+\alpha^2} \right) dx \\[6pt] &=\left. \frac{\pi}{\alpha}-\frac{2}{\alpha}\left\{\arctan\left(\frac{1+\alpha}{1-\alpha} \tan\left(\frac{x}{2}\right)\right) \right\} \right|_0^\pi. \end{align} }[/math]

As [math]\displaystyle{ x }[/math] varies from [math]\displaystyle{ 0 }[/math] to [math]\displaystyle{ \pi }[/math], we have [math]\displaystyle{ \begin{cases} \frac{1+\alpha}{1-\alpha} \tan\left(\frac{x}{2}\right) \geq 0, & |\alpha| \lt 1, \\ \frac{1+\alpha}{1-\alpha} \tan \left( \frac{x}{2}\right) \leq 0, & |\alpha| \gt 1. \end{cases} }[/math]

Hence, [math]\displaystyle{ \left. \arctan\left(\frac{1+\alpha}{1-\alpha}\tan\left(\frac{x}{2}\right)\right)\right|_0^\pi= \begin{cases} \frac{\pi}{2}, & |\alpha| \lt 1, \\ -\frac{\pi}{2}, & |\alpha| \gt 1. \end{cases} }[/math]

Therefore,

[math]\displaystyle{ \frac{d}{d\alpha} \varphi(\alpha)= \begin{cases} 0, & |\alpha| \lt 1, \\ \frac{2\pi}{\alpha}, & |\alpha| \gt 1. \end{cases} }[/math]

Integrating both sides with respect to [math]\displaystyle{ \alpha }[/math], we get: [math]\displaystyle{ \varphi (\alpha) = \begin{cases} C_1, & |\alpha| \lt 1, \\ 2\pi \ln |\alpha| + C_2, & |\alpha| \gt 1. \end{cases} }[/math]

[math]\displaystyle{ C_{1} = 0 }[/math] follows from evaluating [math]\displaystyle{ \varphi (0) }[/math]: [math]\displaystyle{ \varphi(0) =\int_0^\pi \ln(1)\,dx =\int_0^\pi 0\,dx=0. }[/math]

To determine [math]\displaystyle{ C_2 }[/math] in the same manner, we would need to substitute a value of [math]\displaystyle{ \alpha }[/math] with [math]\displaystyle{ |\alpha| \gt 1 }[/math] into [math]\displaystyle{ \varphi (\alpha) }[/math], and no such value is known in advance. Instead, we substitute [math]\displaystyle{ \alpha = \frac{1}{\beta} }[/math], where [math]\displaystyle{ |\beta| \lt 1 }[/math], which reduces the first integral below to the case [math]\displaystyle{ |\beta| \lt 1 }[/math] already solved: [math]\displaystyle{ \begin{align} \varphi(\alpha) &=\int_0^\pi\left(\ln \left (1-2\beta \cos(x)+\beta^2 \right )-2\ln|\beta|\right) dx \\[6pt] &= \int_0^\pi \ln \left (1-2\beta \cos(x)+\beta^2 \right )\,dx -\int_0^\pi 2\ln|\beta| dx \\[6pt] &=0-2\pi\ln|\beta| \\[6pt] &=2\pi\ln|\alpha|. \end{align} }[/math]

Therefore, [math]\displaystyle{ C_{2} = 0 }[/math].

The definition of [math]\displaystyle{ \varphi (\alpha) }[/math] is now complete: [math]\displaystyle{ \varphi (\alpha) = \begin{cases} 0, & |\alpha| \lt 1, \\ 2\pi \ln |\alpha|, & |\alpha| \gt 1. \end{cases} }[/math]

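The foregoing discussion does not apply when [math]\displaystyle{ \alpha = \pm 1 }[/math]: the integrand then has a logarithmic singularity (at [math]\displaystyle{ x = 0 }[/math] for [math]\displaystyle{ \alpha = 1 }[/math] and at [math]\displaystyle{ x = \pi }[/math] for [math]\displaystyle{ \alpha = -1 }[/math]), so the conditions for differentiating under the integral sign are not met.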
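The closed form for φ(α) can be confirmed by direct quadrature at a few values of α on both sides of the unit interval. This is a sketch only; `simpson` is a hypothetical helper, and the sample values of α are arbitrary.

```python
import math


def simpson(g, lo, hi, n=2000):
    """Composite Simpson's rule for g on [lo, hi] with n subintervals (n forced even)."""
    if n % 2:
        n += 1
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3


def phi(alpha):
    # phi(alpha) = integral over [0, pi] of ln(1 - 2 alpha cos x + alpha^2), for |alpha| != 1
    return simpson(lambda x: math.log(1 - 2 * alpha * math.cos(x) + alpha * alpha), 0.0, math.pi)


def phi_closed(alpha):
    # Closed form: 0 for |alpha| < 1, 2 pi ln|alpha| for |alpha| > 1
    if abs(alpha) == 1:
        raise ValueError("the derivation does not cover alpha = +1 or -1")
    return 0.0 if abs(alpha) < 1 else 2 * math.pi * math.log(abs(alpha))


for alpha in (-3.0, -0.3, 0.0, 0.5, 2.0):
    print(alpha, phi(alpha), phi_closed(alpha))
```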

Example 4

Here, we wish to evaluate [math]\displaystyle{ \mathbf I = \int_0^{\pi/2} \frac{1}{(a\cos^2 x +b\sin^2 x)^2}\,dx,\qquad a,b \gt 0. }[/math]

First we calculate: [math]\displaystyle{ \begin{align} \mathbf{J} &= \int_0^{\pi/2} \frac{1}{a\cos^2 x + b \sin^2 x} dx \\[6pt] &= \int_0^{\pi/2} \frac{\frac{1}{\cos^2 x}}{a + b \frac{\sin^2 x}{\cos^2 x}} dx \\[6pt] &= \int_0^{\pi/2} \frac{\sec^2 x}{a +b \tan^2 x} dx \\[6pt] &= \frac{1}{b} \int_0^{\pi/2} \frac{1}{\left(\sqrt{\frac{a}{b}}\right)^2+\tan^2 x}\,d(\tan x) \\[6pt] &= \left.\frac{1}{\sqrt{ab}}\arctan \left(\sqrt{\frac{b}{a}}\tan x\right) \right|_0^{\pi/2} \\[6pt] &= \frac{\pi}{2\sqrt{ab}}. \end{align} }[/math]

The limits of integration being independent of [math]\displaystyle{ a }[/math], we have: [math]\displaystyle{ \frac{\partial \mathbf J}{\partial a}=-\int_0^{\pi/2} \frac{\cos^2 x}{\left(a\cos^2 x+b \sin^2 x\right)^2}\,dx }[/math]

On the other hand: [math]\displaystyle{ \frac{\partial \mathbf J}{\partial a}= \frac{\partial}{\partial a} \left(\frac{\pi}{2\sqrt{ab}}\right) =-\frac{\pi}{4\sqrt{a^3b}}. }[/math]

Equating these two relations then yields [math]\displaystyle{ \int_0^{\pi/2} \frac{\cos^2 x}{\left(a \cos^2 x+b \sin^2 x\right)^2}\,dx=\frac{\pi}{4\sqrt{a^3b}}. }[/math]

In a similar fashion, pursuing [math]\displaystyle{ \frac{\partial \mathbf J}{\partial b} }[/math] yields [math]\displaystyle{ \int_0^{\pi/2}\frac{\sin^2 x}{\left(a\cos^2 x+b\sin^2 x\right)^2}\,dx = \frac{\pi}{4\sqrt{ab^3}}. }[/math]

Adding the two results then produces [math]\displaystyle{ \mathbf I = \int_0^{\pi/2}\frac{1}{\left(a\cos^2x+b\sin^2x\right)^2}\,dx=\frac{\pi}{4\sqrt{ab}}\left(\frac{1}{a}+\frac{1}{b}\right), }[/math] which computes [math]\displaystyle{ \mathbf I }[/math] as desired.
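The four closed forms in this example (J, the cos² and sin² integrals, and I) can be compared with direct quadrature. The helper names `simpson`, `numeric_values` and `closed_values` are hypothetical, and the parameter pairs (a, b) are arbitrary positive samples.

```python
import math


def simpson(g, lo, hi, n=2000):
    """Composite Simpson's rule for g on [lo, hi] with n subintervals (n forced even)."""
    if n % 2:
        n += 1
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3


def numeric_values(a, b):
    """Return (J, cos^2 integral, sin^2 integral, I) by quadrature over [0, pi/2]."""
    def D(x):
        return a * math.cos(x) ** 2 + b * math.sin(x) ** 2

    J = simpson(lambda x: 1 / D(x), 0.0, math.pi / 2)
    Ic = simpson(lambda x: math.cos(x) ** 2 / D(x) ** 2, 0.0, math.pi / 2)
    Is = simpson(lambda x: math.sin(x) ** 2 / D(x) ** 2, 0.0, math.pi / 2)
    I = simpson(lambda x: 1 / D(x) ** 2, 0.0, math.pi / 2)
    return J, Ic, Is, I


def closed_values(a, b):
    """The same four quantities from the formulas derived in the text."""
    r = math.sqrt(a * b)
    return (math.pi / (2 * r),
            math.pi / (4 * math.sqrt(a ** 3 * b)),
            math.pi / (4 * math.sqrt(a * b ** 3)),
            math.pi / (4 * r) * (1 / a + 1 / b))


for a, b in ((2.0, 3.0), (0.5, 4.0)):
    print(numeric_values(a, b))
    print(closed_values(a, b))
```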

This derivation may be generalized. If we define [math]\displaystyle{ \mathbf I_n = \int_0^{\pi/2} \frac{1}{\left(a\cos^2 x+b\sin^2 x\right)^n}\,dx, }[/math] then differentiating under the integral sign with respect to [math]\displaystyle{ a }[/math] and [math]\displaystyle{ b }[/math] and using [math]\displaystyle{ \cos^2 x+\sin^2 x=1 }[/math] shows that [math]\displaystyle{ (1-n)\mathbf I_n = \frac{\partial\mathbf I_{n-1}}{\partial a} + \frac{\partial \mathbf I_{n-1}}{\partial b}. }[/math]

Given [math]\displaystyle{ \mathbf{I}_1 }[/math], this integral reduction formula can be used to compute all of the values of [math]\displaystyle{ \mathbf{I}_n }[/math] for [math]\displaystyle{ n \gt 1 }[/math]. Integrals like [math]\displaystyle{ \mathbf{I} }[/math] and [math]\displaystyle{ \mathbf{J} }[/math] may also be handled using the Weierstrass substitution.
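The reduction formula can be exercised numerically. In this sketch (helper names `simpson`, `I_direct` and `step` are hypothetical), each application of `step` builds I_n from I_{n−1} by replacing the two partial derivatives with central differences, starting from the closed form I_1 = J = π/(2√(ab)); the results are compared with direct quadrature. A computer algebra system could apply the formula exactly; finite differences are used here only to keep the sketch dependency-free, at the cost of some accuracy with each nested step.

```python
import math


def simpson(g, lo, hi, n=2000):
    """Composite Simpson's rule for g on [lo, hi] with n subintervals (n forced even)."""
    if n % 2:
        n += 1
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3


def I_direct(n, a, b):
    # Direct quadrature of I_n = integral over [0, pi/2] of (a cos^2 x + b sin^2 x)^(-n)
    return simpson(lambda x: (a * math.cos(x) ** 2 + b * math.sin(x) ** 2) ** (-n), 0.0, math.pi / 2)


def I1(a, b):
    # I_1 = J = pi / (2 sqrt(ab)), computed in the text
    return math.pi / (2 * math.sqrt(a * b))


def step(I_prev, n, h=1e-4):
    """Build I_n from I_{n-1} via (1 - n) I_n = dI_{n-1}/da + dI_{n-1}/db, using central differences."""
    def I_n(a, b):
        d_a = (I_prev(a + h, b) - I_prev(a - h, b)) / (2 * h)
        d_b = (I_prev(a, b + h) - I_prev(a, b - h)) / (2 * h)
        return (d_a + d_b) / (1 - n)
    return I_n


I2 = step(I1, 2)
I3 = step(I2, 3)
print(I2(2.0, 3.0), I_direct(2, 2.0, 3.0))
print(I3(2.0, 3.0), I_direct(3, 2.0, 3.0))
```

When a = b = 1 the integrand is identically 1, so every I_n equals π/2, which gives a simple sanity check.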

Example 5

Here, we consider the integral [math]\displaystyle{ \mathbf I(\alpha)=\int_0^{\pi/2} \frac{\ln (1+\cos\alpha \cos x)}{\cos x}\,dx, \qquad 0 \lt \alpha \lt \pi. }[/math]

Differentiating under the integral with respect to [math]\displaystyle{ \alpha }[/math], we have [math]\displaystyle{ \begin{align} \frac{d}{d\alpha} \mathbf{I}(\alpha) &= \int_0^{\pi/2} \frac{\partial}{\partial\alpha} \left(\frac{\ln(1 + \cos\alpha \cos x)}{\cos x}\right) \, dx \\[6pt] &=-\int_0^{\pi/2}\frac{\sin \alpha}{1+\cos \alpha \cos x}\,dx \\ &=-\int_0^{\pi/2}\frac{\sin \alpha}{\left(\cos^2 \frac{x}{2}+\sin^2 \frac{x}{2}\right)+\cos \alpha \left(\cos^2 \frac{x}{2}-\sin^2 \frac{x}{2}\right)} \, dx \\[6pt] &=-\frac{\sin\alpha}{1-\cos\alpha} \int_0^{\pi/2} \frac{1}{\cos^2\frac{x}{2}} \frac{1}{\frac{1+\cos \alpha}{1-\cos \alpha} +\tan^2 \frac{x}{2}} \, dx \\[6pt] &=-\frac{2\sin\alpha}{1-\cos\alpha} \int_0^{\pi/2} \frac{\frac{1}{2} \sec^2 \frac{x}{2}}{\frac{2 \cos^2 \frac{\alpha}{2}}{2 \sin^2\frac{\alpha}{2}} + \tan^2 \frac{x}{2}} \, dx \\[6pt] &=-\frac{2\left(2 \sin \frac{\alpha}{2} \cos \frac{\alpha}{2}\right)}{2 \sin^2 \frac{\alpha}{2}} \int_0^{\pi/2} \frac{1}{\cot^2\frac{\alpha}{2} + \tan^2 \frac{x}{2}} \, d\left(\tan \frac{x}{2}\right)\\[6pt] &=-2\cot \frac{\alpha}{2}\int_0^{\pi/2} \frac{1}{\cot^2\frac{\alpha}{2} + \tan^2\frac{x}{2}}\,d\left(\tan \frac{x}{2}\right)\\[6pt] &=-2\arctan \left(\tan \frac{\alpha}{2} \tan \frac{x}{2} \right) \bigg|_0^{\pi/2}\\[6pt] &=-\alpha. \end{align} }[/math]

Therefore: [math]\displaystyle{ \mathbf{I}(\alpha) = C - \frac{\alpha^2}{2}. }[/math]

But [math]\displaystyle{ \mathbf{I} \left(\frac{\pi}{2} \right) = 0 }[/math], because [math]\displaystyle{ \cos\frac{\pi}{2} = 0 }[/math] makes the integrand vanish identically, so [math]\displaystyle{ C = \frac{\pi^2}{8} }[/math] and [math]\displaystyle{ \mathbf I(\alpha) = \frac{\pi^2}{8}-\frac{\alpha^2}{2}. }[/math]
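A quadrature check of I(α) = π²/8 − α²/2 for a few α in (0, π) is sketched below; `simpson` is a hypothetical helper. The integrand has a removable singularity at x = π/2, where it tends to cos α, so the sketch uses `math.log1p` to stay accurate when cos x is tiny and substitutes the limit if cos x is exactly zero.

```python
import math


def simpson(g, lo, hi, n=2000):
    """Composite Simpson's rule for g on [lo, hi] with n subintervals (n forced even)."""
    if n % 2:
        n += 1
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3


def I(alpha):
    c = math.cos(alpha)

    def integrand(x):
        cx = math.cos(x)
        if cx == 0.0:
            return c  # limit of ln(1 + c cx) / cx as cx -> 0
        return math.log1p(c * cx) / cx

    return simpson(integrand, 0.0, math.pi / 2)


def I_closed(alpha):
    return math.pi ** 2 / 8 - alpha ** 2 / 2


for alpha in (0.3, 1.0, 2.5):
    print(alpha, I(alpha), I_closed(alpha))
```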

Example 6

Here, we consider the integral [math]\displaystyle{ \int_0^{2\pi} e^{\cos\theta} \cos(\sin\theta) \, d\theta. }[/math]

We introduce a new variable φ and rewrite the integral as [math]\displaystyle{ f(\varphi) = \int_0^{2\pi} e^{\varphi\cos\theta} \cos(\varphi\sin\theta)\,d\theta. }[/math]

When φ = 1 this equals the original integral. However, this more general integral may be differentiated with respect to [math]\displaystyle{ \varphi }[/math]: [math]\displaystyle{ \begin{align} \frac{df}{d\varphi} &= \int_0^{2\pi} \frac{\partial}{\partial\varphi}\left(e^{\varphi\cos\theta} \cos(\varphi\sin\theta)\right)\,d\theta \\[6pt] &= \int_0^{2\pi} e^{\varphi\cos\theta} \left( \cos\theta\cos(\varphi\sin\theta)-\sin\theta\sin(\varphi\sin\theta) \right)\,d\theta. \end{align} }[/math]

Now, fix φ, and consider the vector field on [math]\displaystyle{ \mathbf{R}^2 }[/math] defined by [math]\displaystyle{ \mathbf{F}(x,y) = (F_1(x,y), F_2(x,y)) := (e^{\varphi x} \sin (\varphi y), e^{\varphi x} \cos (\varphi y)) }[/math]. Further, choose the positively oriented parametrization of the unit circle [math]\displaystyle{ S^1 }[/math] given by [math]\displaystyle{ \mathbf{r} \colon [0, 2\pi) \to \mathbf{R}^2 }[/math], [math]\displaystyle{ \mathbf{r}(\theta) := (\cos \theta, \sin \theta) }[/math], so that [math]\displaystyle{ \mathbf{r}'(\theta) = (-\sin \theta, \cos \theta) }[/math]. Then the final integral above is precisely [math]\displaystyle{ \begin{align} & \int_0^{2\pi} e^{\varphi\cos\theta} \left( \cos\theta\cos(\varphi\sin\theta)-\sin\theta\sin(\varphi\sin\theta) \right)\,d\theta \\[6pt] = {} & \int_0^{2\pi} (e^{\varphi \cos \theta} \sin (\varphi \sin \theta), e^{\varphi \cos \theta} \cos (\varphi \sin \theta)) \cdot (-\sin \theta, \cos \theta) \, d\theta\\[6pt] = {} & \int_0^{2\pi} \mathbf{F}(\mathbf{r}(\theta)) \cdot \mathbf{r}'(\theta) \, d\theta\\[6pt] = {} & \oint_{S^1} \mathbf{F}(\mathbf{r}) \cdot d\mathbf{r} = \oint_{S^1} F_1 \, dx + F_2 \, dy, \end{align} }[/math] the line integral of [math]\displaystyle{ \mathbf{F} }[/math] over [math]\displaystyle{ S^1 }[/math]. By Green's theorem, this equals the double integral [math]\displaystyle{ \iint_D \frac{\partial F_2}{\partial x} - \frac{\partial F_1}{\partial y} \, dA, }[/math] where [math]\displaystyle{ D }[/math] is the closed unit disc. Its integrand is identically 0, since [math]\displaystyle{ \frac{\partial F_2}{\partial x} = \varphi e^{\varphi x} \cos(\varphi y) = \frac{\partial F_1}{\partial y} }[/math], so [math]\displaystyle{ df/d\varphi }[/math] is likewise identically zero. This implies that f(φ) is constant. The constant may be determined by evaluating [math]\displaystyle{ f }[/math] at [math]\displaystyle{ \varphi = 0 }[/math]: [math]\displaystyle{ f(0) = \int_0^{2\pi} 1\,d\theta = 2\pi. }[/math]

Therefore, the original integral also equals [math]\displaystyle{ 2\pi }[/math].
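A numerical sketch of this example: f(φ) and df/dφ are evaluated for several φ, and should give 2π and 0 respectively. The helper `trapezoid_periodic` is hypothetical; the plain trapezoidal rule is chosen instead of Simpson's rule because the integrands are smooth and 2π-periodic, a setting in which the equally weighted trapezoidal rule converges very rapidly.

```python
import math


def trapezoid_periodic(g, n=256):
    """Trapezoidal rule over one period [0, 2 pi); very accurate for smooth periodic g."""
    h = 2 * math.pi / n
    return h * sum(g(k * h) for k in range(n))


def f(phi):
    # f(phi) = integral over [0, 2 pi] of e^{phi cos t} cos(phi sin t)
    return trapezoid_periodic(lambda t: math.exp(phi * math.cos(t)) * math.cos(phi * math.sin(t)))


def df_dphi(phi):
    # Integral of the partial derivative with respect to phi, as written in the text
    return trapezoid_periodic(
        lambda t: math.exp(phi * math.cos(t))
        * (math.cos(t) * math.cos(phi * math.sin(t)) - math.sin(t) * math.sin(phi * math.sin(t)))
    )


for phi in (0.0, 0.5, 1.0, 3.0):
    print(phi, f(phi), df_dphi(phi))
```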

Other problems to solve

There are innumerable other integrals that can be solved using the technique of differentiation under the integral sign. For example, in each of the following cases, the original integral may be replaced by a similar integral having a new parameter [math]\displaystyle{ \alpha }[/math]: [math]\displaystyle{ \begin{align} \int_0^\infty \frac{\sin x}{x}\,dx &\to \int_0^\infty e^{-\alpha x} \frac{\sin x}{x} dx,\\[6pt] \int_0^{\pi/2} \frac{x}{\tan x}\,dx &\to\int_0^{\pi/2} \frac{\tan^{-1}(\alpha \tan x)}{\tan x} dx,\\[6pt] \int_0^\infty \frac{\ln (1+x^2)}{1+x^2}\,dx &\to\int_0^\infty \frac{\ln (1+\alpha^2 x^2)}{1+x^2} dx, \\[6pt] \int_0^1 \frac{x-1}{\ln x}\,dx &\to \int_0^1 \frac{x^\alpha-1}{\ln x} dx. \end{align} }[/math]

The first integral, the Dirichlet integral, is absolutely convergent for positive α but only conditionally convergent when [math]\displaystyle{ \alpha = 0 }[/math]. Therefore, differentiation under the integral sign is easy to justify when [math]\displaystyle{ \alpha \gt 0 }[/math], but proving that the resulting formula remains valid when [math]\displaystyle{ \alpha = 0 }[/math] requires some careful work.
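For α > 0, the case in which the justification is easy, differentiating the first parametrized integral F(α) = ∫0^∞ e^{−αx} (sin x)/x dx under the integral sign gives F′(α) = −∫0^∞ e^{−αx} sin x dx = −1/(1 + α²). Since |F(α)| ≤ 1/α → 0 as α → ∞, this integrates to F(α) = π/2 − arctan α. The sketch below checks both formulas numerically for a few positive α; `simpson` is a hypothetical helper, and truncating the infinite range at x = 40/α is an assumption that leaves a tail of order e^{−40}. Letting α → 0+ would give the classical value π/2, which is exactly the step that needs the careful work described above.

```python
import math


def simpson(g, lo, hi, n=8000):
    """Composite Simpson's rule for g on [lo, hi] with n subintervals (n forced even)."""
    if n % 2:
        n += 1
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3


def F(alpha):
    # Integral of e^{-alpha x} sin(x)/x over [0, 40/alpha] (truncated infinite range)
    def g(x):
        sinc = 1.0 if x == 0.0 else math.sin(x) / x
        return math.exp(-alpha * x) * sinc

    return simpson(g, 0.0, 40.0 / alpha)


def dF_under_sign(alpha):
    # Integral of the partial derivative: -integral of e^{-alpha x} sin(x), same truncation
    return -simpson(lambda x: math.exp(-alpha * x) * math.sin(x), 0.0, 40.0 / alpha)


for alpha in (0.5, 1.0, 2.0):
    print(alpha, F(alpha), math.pi / 2 - math.atan(alpha), dF_under_sign(alpha), -1 / (1 + alpha ** 2))
```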

Infinite series

The measure-theoretic version of differentiation under the integral sign also applies to summation (finite or infinite) by interpreting summation as integration with respect to counting measure. An example of an application is the fact that a power series can be differentiated term by term inside its radius of convergence.[citation needed]
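Viewed as an integral against counting measure on {0, 1, 2, ...}, the rule becomes term-by-term differentiation. A minimal check with the geometric series Σ xⁿ = 1/(1 − x) for |x| < 1, an illustrative choice: the termwise derivative Σ n xⁿ⁻¹ should equal 1/(1 − x)². The helper `partial_sums` is hypothetical, and the series is truncated at a fixed number of terms, which is harmless for the sample points used.

```python
import math


def partial_sums(x, terms=5000):
    """Return (sum of x^n, sum of n x^(n-1)) over n = 0 .. terms - 1."""
    s = 0.0
    ds = 0.0
    for n in range(terms):
        s += x ** n
        if n:
            ds += n * x ** (n - 1)  # termwise derivative of x^n
    return s, ds


for x in (-0.5, 0.5, 0.9):
    s, ds = partial_sums(x)
    print(x, s, 1 / (1 - x), ds, 1 / (1 - x) ** 2)
```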

Euler-Lagrange equations

The Leibniz integral rule is used in the derivation of the Euler-Lagrange equation in variational calculus.

In popular culture

Differentiation under the integral sign is mentioned in the late physicist Richard Feynman's best-selling memoir Surely You're Joking, Mr. Feynman! in the chapter "A Different Box of Tools". He describes learning it, while in high school, from an old text, Advanced Calculus (1926), by Frederick S. Woods (who was a professor of mathematics at the Massachusetts Institute of Technology). The technique was not often taught when Feynman later received his formal education in calculus, but using this technique, Feynman was able to solve otherwise difficult integration problems upon his arrival at graduate school at Princeton University:

One thing I never did learn was contour integration. I had learned to do integrals by various methods shown in a book that my high school physics teacher Mr. Bader had given me. One day he told me to stay after class. "Feynman," he said, "you talk too much and you make too much noise. I know why. You're bored. So I'm going to give you a book. You go up there in the back, in the corner, and study this book, and when you know everything that's in this book, you can talk again." So every physics class, I paid no attention to what was going on with Pascal's Law, or whatever they were doing. I was up in the back with this book: "Advanced Calculus", by Woods. Bader knew I had studied "Calculus for the Practical Man" a little bit, so he gave me the real works—it was for a junior or senior course in college. It had Fourier series, Bessel functions, determinants, elliptic functions—all kinds of wonderful stuff that I didn't know anything about. That book also showed how to differentiate parameters under the integral sign—it's a certain operation. It turns out that's not taught very much in the universities; they don't emphasize it. But I caught on how to use that method, and I used that one damn tool again and again. So because I was self-taught using that book, I had peculiar methods of doing integrals. The result was, when guys at MIT or Princeton had trouble doing a certain integral, it was because they couldn't do it with the standard methods they had learned in school. If it was contour integration, they would have found it; if it was a simple series expansion, they would have found it. Then I come along and try differentiating under the integral sign, and often it worked. So I got a great reputation for doing integrals, only because my box of tools was different from everybody else's, and they had tried all their tools on it before giving the problem to me.

See also

- Chain rule

- Differentiation of integrals

- Leibniz rule (generalized product rule)

- Reynolds transport theorem, a generalization of Leibniz rule

References

- Protter, Murray H.; Morrey, Charles B. Jr. (1985). "Differentiation under the Integral Sign". Intermediate Calculus (2nd ed.). New York: Springer. pp. 421–426. doi:10.1007/978-1-4612-1086-3. ISBN 978-0-387-96058-6. https://books.google.com/books?id=3lTmBwAAQBAJ&pg=PA421.

- Talvila, Erik (June 2001). "Necessary and Sufficient Conditions for Differentiating under the Integral Sign". American Mathematical Monthly 108 (6): 544–548. doi:10.2307/2695709. https://www.jstor.org/stable/2695709. Retrieved 16 April 2022.

- Abraham, Max; Becker, Richard (1950). Classical Theory of Electricity and Magnetism (2nd ed.). London: Blackie & Sons. pp. 39–40.

- Flanders, Harley (June–July 1973). "Differentiation under the integral sign". American Mathematical Monthly 80 (6): 615–627. doi:10.2307/2319163. http://sgpwe.izt.uam.mx/files/users/uami/jdf/proyectos/Derivar_inetegral.pdf.

- Folland, Gerald (1999). Real Analysis: Modern Techniques and their Applications (2nd ed.). New York: John Wiley & Sons. p. 56. ISBN 978-0-471-31716-6.

- Cheng, Steve (6 September 2010). Differentiation under the integral sign with weak derivatives (Report). CiteSeerX.

- Spivak, Michael (1994). Calculus (3rd ed.). Houston, Texas: Publish or Perish, Inc. pp. 267–268. ISBN 978-0-914098-89-8. https://archive.org/details/calculus00spiv_191.

- Spivak, Michael (1965). Calculus on Manifolds. Addison-Wesley Publishing Company. p. 31. ISBN 978-0-8053-9021-6.

Further reading

- Amazigo, John C.; Rubenfeld, Lester A. (1980). "Single Integrals: Leibnitz's Rule; Numerical Integration". Advanced Calculus and its Applications to the Engineering and Physical Sciences. New York: Wiley. pp. 155–165. ISBN 0-471-04934-4. https://archive.org/details/advancedcalculus0000amaz/page/155.

- Kaplan, Wilfred (1973). "Integrals Depending on a Parameter—Leibnitz's Rule". Advanced Calculus (2nd ed.). Reading: Addison-Wesley. pp. 285–288.

External links

- Harron, Rob. "The Leibniz Rule". MAT-203. https://math.hawaii.edu/~rharron/teaching/MAT203/LeibnizRule.pdf.
